This post was most recently updated on March 17th, 2025

Are you aware that over 42% of all internet traffic is generated by bots? That’s right: according to statista.com, bots account for a staggering 42.3% of all internet activity. With technology advancing rapidly, it’s not surprising that bots have become such a prevalent force on the web. But it’s important to note that bots can wreck publishers’ AdSense accounts if they go undetected.

This article breaks down everything you need to know about bot traffic and the best solution to detect and block invalid traffic (IVT) for good.

Not all bots are designed to inflate traffic or defraud marketers. Because we live in an automated environment, some bots exist to perform specific, repetitive tasks that would be impossible or difficult for humans to carry out at high speed. These tasks include harvesting content, scraping data, and capturing analytics.

Distinguishing good bots vs. bad bots does require a bit of a discerning eye, but it can be done if you have the right information.

Imposter bots are the most dangerous bots. They masquerade as legitimate visitors, and their intent is far more malicious than generating fake clicks: their purpose is to bypass online security measures. They’re often the culprits behind distributed denial-of-service (DDoS) attacks. They may also inject spyware into your site or pose as a search engine crawler, among other things.

Click bots fraudulently click on ads, skewing your website analytics. If your analytics data shows inaccurate metrics, you may end up making the wrong marketing decisions. These bots are especially harmful to marketers running pay-per-click (PPC) campaigns: every bot click is money wasted on a fake visit that didn’t come from a human, let alone from the advertiser’s audience.

Download bots are designed to artificially inflate download counts for software, music, videos, or other digital content. They repeatedly download the same file, making it appear more popular than it actually is. Developers and content creators often use this type of bot to boost their visibility and gain an unfair advantage over competitors.

Web scrapers have the opposite effect of copyright bots, which scan the web for unauthorized copies of protected content. Instead of protecting proprietary content, scraper bots steal it and repurpose it elsewhere. This can include everything from text and images to product prices and inventory information. While some scraping is legitimate, such as for academic research, many scrapers are used to copy proprietary content so that someone else can pass it off as their own, which harms the original content creators and website owners.

Spam bots are among the most common bad bots. They distribute spammy content such as unsolicited emails, spam comments, and phishing scams. They can also be used to run negative SEO campaigns against competitors. Nefarious actors and businesses typically use them to gain an unfair advantage or to spread malicious content.

Spy bots mine sensitive information about individuals and businesses. They can harvest login credentials, credit card data, and email addresses from websites, newsgroups, chat-room conversations, and similar sources. That information can then be used for nefarious purposes such as identity theft, creating deepfakes, or targeted phishing scams.

Given the definitions above, you can see why some bots can hurt your publishing business, while others pose no real risk and are nothing more than an annoyance. As a publisher, though, keep in mind that ad networks answer to advertisers as well as publishers.

They run regular quality checks to make sure the websites in their network are free from fraudulent bots. Unfortunately, whether or not a publisher knows about the invalid traffic on their site, ad networks tend to put their advertisers first.

They won’t think twice about banning a website that doesn’t comply with their policies, and publishers are held fully responsible for keeping their sites free of unwanted bots.

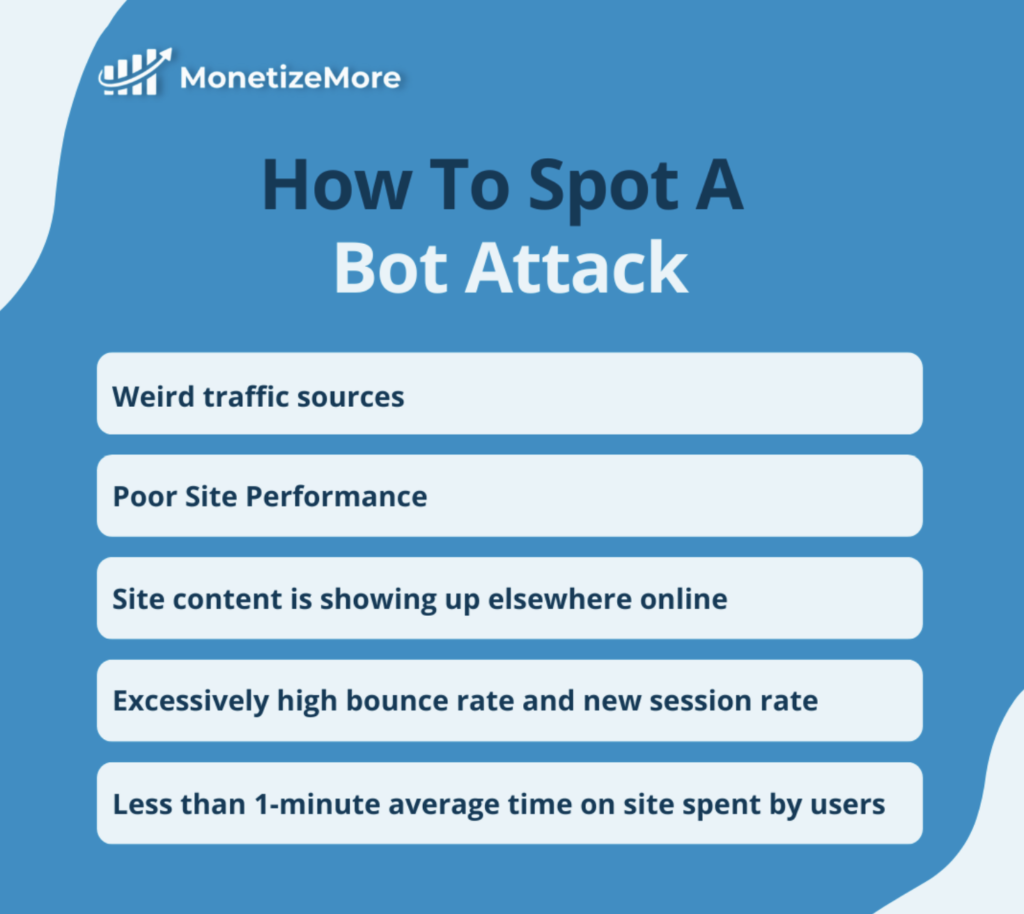

An unusual increase or decrease in your analytics metrics may indicate that your site is receiving fraudulent traffic. Sites overrun with bots usually show telltale signs, so watch out for the following:

#1: Excessively high bounce rate and new session rate.

#2: Site content is showing up elsewhere online. This may indicate that scraper bots have visited your site.

#3: Poor site performance. If your website has slowed down and crashes frequently, it might be overrun with bots.

#4: Traffic comes from unexpected or irrelevant geographic locations. For instance, your site is in English, but most of your traffic comes from non-English-speaking countries.

#5: Your top referring domains (referral links) are any of the following:

- Spammy (unrelated to your site and made up of bare links with no real content)

- Malicious (may contain malware)

- Suspended sites

#6: Users spend less than 60 seconds on your site on average. If your content is long-form and takes time to read, a short average visit may mean that many of your visitors are not human.
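Several of these warning signs can be turned into a rough automated check. The sketch below is a minimal illustration, assuming you can export per-session records (bounce, new vs. returning, duration, country, referrer) from your analytics tool. The `Session` record, the `bot_warning_signs` function, and every threshold are hypothetical choices for illustration, not Google Analytics fields or Traffic Cop’s detection method. It covers signs #1, #4, #5, and #6.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Session:
    # Hypothetical per-visit record exported from an analytics tool.
    bounced: bool            # visitor left after viewing a single page
    is_new: bool             # first visit from this client
    duration_seconds: float  # time spent on the site
    country: str             # country code of the visitor
    referrer: str            # referring domain ("" for direct traffic)


def bot_warning_signs(sessions, expected_countries, suspicious_referrers,
                      max_bounce_rate=0.8, max_new_rate=0.9,
                      min_expected_share=0.5, min_avg_seconds=60.0):
    """Return which of warning signs #1, #4, #5 and #6 a sample of sessions trips.

    All thresholds are illustrative defaults; tune them to your own baseline.
    """
    n = len(sessions)
    if n == 0:
        return []
    signs = []

    # Sign #1: unusually high bounce rate and share of new sessions.
    if sum(s.bounced for s in sessions) / n > max_bounce_rate:
        signs.append("high bounce rate")
    if sum(s.is_new for s in sessions) / n > max_new_rate:
        signs.append("high new-session rate")

    # Sign #4: most traffic comes from countries you don't target.
    expected_share = sum(s.country in expected_countries for s in sessions) / n
    if expected_share < min_expected_share:
        signs.append("traffic mostly from unexpected countries")

    # Sign #5: a known spammy or malicious domain is among the top 3 referrers.
    top_referrers = Counter(s.referrer for s in sessions).most_common(3)
    if any(domain in suspicious_referrers for domain, _ in top_referrers):
        signs.append("suspicious top referrer")

    # Sign #6: average time on site is very short.
    if sum(s.duration_seconds for s in sessions) / n < min_avg_seconds:
        signs.append("short average time on site")

    return signs


sample = [
    Session(True, True, 4.0, "BR", "spam-links.example"),
    Session(True, True, 6.0, "BR", "spam-links.example"),
    Session(False, False, 240.0, "US", "google.com"),
]
print(bot_warning_signs(sample, {"US", "GB"}, {"spam-links.example"}))
# ['traffic mostly from unexpected countries', 'suspicious top referrer']
```

A tripped sign is a prompt to investigate, not proof of bots: a viral post from abroad can shift your geography just as easily as a bot network can.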

There are some preventive measures you can take on your own, such as adding CAPTCHAs to forms and using a quality website builder that protects your site against hackers and their malware. You can also filter out known bots when analyzing your analytics data. However, it is practically impossible to block all bot traffic, and to be honest, you shouldn’t preemptively block bot traffic anyway, since not all bots are bad. Addressing bots as needed is the better approach.
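Filtering known bots out of your analytics data can be as simple as dropping rows whose user agent openly identifies as a bot or script. The sketch below assumes each analytics row is a dict with an optional `user_agent` key; the function names and the short pattern list are hypothetical and are not a maintained bot list.

```python
import re

# Hypothetical, deliberately short list of user-agent fragments used by
# self-declared crawlers and scripting tools; real bot lists are much longer.
KNOWN_BOT_PATTERN = re.compile(
    r"bot|crawler|spider|headlesschrome|python-requests|curl",
    re.IGNORECASE,
)


def is_declared_bot(user_agent: str) -> bool:
    """Return True if the user agent is missing or openly identifies as a bot."""
    return not user_agent or KNOWN_BOT_PATTERN.search(user_agent) is not None


def filter_known_bots(rows):
    """Keep only analytics rows whose user agent does not look like a declared bot."""
    return [row for row in rows if not is_declared_bot(row.get("user_agent", ""))]


rows = [
    {"page": "/guide", "user_agent": "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"},
    {"page": "/guide", "user_agent": "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/120.0 Safari/537.36"},
    {"page": "/guide", "user_agent": "python-requests/2.31.0"},
    {"page": "/guide"},  # no user agent recorded
]
print(len(filter_known_bots(rows)))  # 1
```

This only catches bots that announce themselves. Imposter bots send an ordinary browser user agent and pass straight through, which is why user-agent filtering is a starting point rather than a complete defense.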

In conclusion, every online business needs to understand how much damage bots and invalid traffic can do to its ad revenue. Implementing an award-winning solution like ‘Traffic Cop’, which acts as a gatekeeper for incoming traffic, can be the difference between a thriving business and one that is constantly struggling to stay afloat. With Traffic Cop, you can rest easy knowing that your ad revenue is protected and you won’t have to worry about clawbacks or suspension from advertising partners. Don’t let bots and invalid traffic eat away at your hard-earned revenue any longer.

Take action now and safeguard the future of your online business with ‘Traffic Cop’.

Detecting bot traffic isn’t always easy. You can look for signs such as very high bounce rates, strange referral traffic sources, and traffic from irrelevant geographical locations in your Google Analytics accounts. We provide more information about detecting bot traffic in our blog post.

To discourage bot traffic, you can use your robots.txt file to tell specific crawlers not to access your site, although only well-behaved bots follow these rules. To prevent invalid traffic and bot traffic that can harm your publisher business by clicking on your ads, consider using an invalid traffic detection and prevention service such as Traffic Cop. Traffic Cop uses machine learning and fingerprinting algorithms to detect and block invalid traffic from viewing and clicking on your ads.
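robots.txt rules follow the Robots Exclusion Protocol (RFC 9309), a voluntary convention: compliant crawlers read and obey them, while malicious bots can simply ignore them. The sketch below uses Python’s standard-library `urllib.robotparser` to show how a compliant crawler would interpret a small robots.txt. `ExampleScraperBot` and the `/private/` path are hypothetical placeholders.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: shut out one named scraper entirely and keep
# every other crawler out of /private/.
ROBOTS_TXT = """\
User-agent: ExampleScraperBot
Disallow: /

User-agent: *
Disallow: /private/
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())  # parse rules from text instead of fetching a URL

print(parser.can_fetch("ExampleScraperBot", "https://example.com/article"))  # False
print(parser.can_fetch("Googlebot", "https://example.com/article"))          # True
print(parser.can_fetch("Googlebot", "https://example.com/private/page"))     # False
```

Because compliance is voluntary, robots.txt is useful for steering legitimate crawlers, not for stopping the click bots and imposter bots described earlier.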

Generally, bots visit your site to gather information or perform a specific task. There are many different types of bots, some good and others bad. Examples of good bots include SEO crawlers, search engine bots, and copyright bots. Bots you don’t want on your site include web scrapers, spam bots, and bots that click on your ads.

With over ten years at the forefront of programmatic advertising, Aleesha Jacob is a renowned Ad-Tech expert, blending innovative strategies with cutting-edge technology. Her insights have reshaped programmatic advertising, leading to groundbreaking campaigns and 10X ROI increases for publishers and global brands. She believes in setting new standards in dynamic ad targeting and optimization.
